At Platform, we’ve now built and funded a number of startups leveraging Generative AI.

A majority of the pitch decks we receive include some reference to how the startup plans to leverage Generative AI. (Two years ago, it was blockchain, blockchain, blockchain.)

Generative AI is extremely powerful, but it’s not a panacea. At least, not yet. So, how do we distinguish between startup opportunities where Generative AI is truly a game-changer and those where it is simply being wedged in to tick a box?

tl;dr: if you are short on time, you can jump to the end and review my list of evaluation criteria. The remainder of this post explains in more detail how I think about the near-term opportunities Generative AI creates and how I arrived at these criteria.

The Source of the Opportunity

Firstly, let’s look at where the Generative AI opportunity comes from. I’m talking here about Generative AI in the broadest sense, but I do focus more on the textual use cases. In my examples, I’ll use GPT, a Large Language Model (LLM).

LLMs (and related language models) deliver a significant disruption because, for the first time, they allow people to communicate easily with computers using natural language.

Like any innovation, LLMs are built on the shoulders of prior work. Natural Language Processing (NLP) is not new. However, prior NLP approaches struggled to deal with truly natural language because we humans are vague and inconsistent. We imply, we use context, we use idioms, we use different sentence structures, etc.

Without getting too far into the weeds, LLMs like GPT mark an inflection point in the development of NLP because of their scale, their architecture, and their training.

All three aspects are critical to dealing with the vagueness and inconsistency of human language. All three aspects are also important in understanding the startup opportunity.

The Unstructured Meets the Structured

Until now, using any piece of computer software (app, website, etc.) has required the human user to understand, to varying extents, the structure the software imposes. The human must both enter information in the format the software expects and interpret the results in the format the software provides.

The most obvious case is filling out a form. The user has to understand what the software expects and what it will accept in each field. Even for less structured tasks like sending an email, we humans still need to know, for example, that we have to open a browser, navigate to Gmail, and then understand that an email comprises recipients (to, cc, or bcc), a subject line, and a body.

The quality of the UX design determines how painful this interaction is, but it remains an essential element of human-computer interaction.

With LLMs, this need to understand system structure can fade into the background. Humans and computers can communicate using human language.

The Two Worlds

Now, let’s remember what computers were already really good at long before Generative AI came along. Computers can store enormous amounts of information and recall it with effectively zero errors. They can do calculations incredibly quickly. These are jobs we ceded to computers long ago.

So, there’s already an enormous amount of know-how, open source software, and existing systems from the pre-Generative AI era.

For this reason, I see a big part of the near-term opportunity from Generative AI as interfacing between the natural language world of humans and the structured data world of existing systems, letting each domain focus on what it does best.
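To make that interface a little more concrete, here is a minimal Python sketch, assuming the model has been prompted to reply with JSON. The `Email` schema, the `parse_llm_email` helper, and the sample model output are all hypothetical, and no real LLM API is called. The point is the division of labor: the language model turns a natural-language request into fields, and conventional code validates those fields before they reach an existing structured system.

```python
import json
from dataclasses import dataclass, field


# The structured side: the fields an email system expects.
@dataclass
class Email:
    to: list[str]
    subject: str
    body: str
    cc: list[str] = field(default_factory=list)
    bcc: list[str] = field(default_factory=list)


def parse_llm_email(raw: str) -> Email:
    """Validate model output (assumed to be JSON) before handing it to structured systems."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if the reply isn't JSON
    if not data.get("to"):
        raise ValueError("email needs at least one recipient")
    for key in ("to", "cc", "bcc"):
        value = data.get(key, [])
        # Crude check: every recipient must be a string containing "@".
        if not isinstance(value, list) or not all(isinstance(a, str) and "@" in a for a in value):
            raise ValueError(f"{key} must be a list of email addresses")
    return Email(
        to=data["to"],
        subject=str(data.get("subject", "")),
        body=str(data.get("body", "")),
        cc=data.get("cc", []),
        bcc=data.get("bcc", []),
    )


# Hypothetical reply to: "Email Sam that the board meeting moved to Thursday, and copy Alex."
llm_output = (
    '{"to": ["sam@example.com"], "cc": ["alex@example.com"], '
    '"subject": "Board meeting moved", "body": "Hi Sam, the board meeting is now on Thursday."}'
)
email = parse_llm_email(llm_output)
print(email.to, email.cc, email.subject)
```

The validation step matters because the model’s output is not guaranteed to be well-formed; the structured system only ever sees data that has passed the checks.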

In the future, we may be able to rely entirely on the persistence and logic within the language model itself, and we won’t even have to think about the interface between LLM output and structured data. How long this will take is hard to predict, but the recent $100M investment in Pinecone, a vector database, shows that investors see a huge need for a persistence layer for LLMs.

Accelerating and Eliminating the Human “Go Between”

Many jobs performed by humans today involve translating between the unstructured world of human communication and the structured world of traditional software. LLMs are resource-intensive, but they don’t require food, water, or salaries. Now that they can handle human language, the cost and time of interfacing between humans and computers are massively reduced.

Some jobs will be entirely eliminated by Generative AI, but many will be massively accelerated, and also made less boring, because Generative AI assistance will handle the most repetitive parts. Software engineers who have been using GitHub Copilot have seen that it doesn’t wholly “write code for you.” However, it makes them much more efficient because it eliminates the need to remember esoteric documentation, syntax, etc.

CAC Reduction

Often, the cost of paying humans to do certain jobs has limited the economic viability of parts of a startup’s business. This is particularly true of the cost of acquiring customers (CAC).

At Platform, we are obsessive about unit economics. One of the biggest reasons that startups fail is that they are unable to acquire customers for less money than those customers end up paying them.

Generative AI could significantly reduce CAC, making viable some startups that would otherwise have unworkable unit economics.

However, this is tricky! Some of these attempts will inevitably turn into zero-sum games. Just as Generative AI can reduce the cost of interfacing with humans, humans can equally use it to resist such attempts. For example, it seems inevitable that we’ll end up with Generative AIs that send automated, personalized sales emails battling Generative AIs that try to identify and reject them.
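The arithmetic behind this can be sketched in a few lines of Python. The figures are invented for illustration, and the model is deliberately simple: lifetime value is monthly revenue × gross margin × expected lifetime in months (a common simplification that ignores churn curves and discounting), compared against CAC. Using gross profit rather than raw revenue is an assumption that makes the test slightly stricter than “customers pay more than they cost to acquire.”

```python
def lifetime_value(monthly_revenue: float, gross_margin: float, lifetime_months: float) -> float:
    """Gross profit a customer generates over their expected lifetime (simplified)."""
    return monthly_revenue * gross_margin * lifetime_months


def is_viable(cac: float, ltv: float, min_ratio: float = 1.0) -> bool:
    """A customer must be worth more than it costs to acquire; min_ratio=1.0 is break-even."""
    return ltv / cac > min_ratio


# Hypothetical numbers for illustration only.
ltv = lifetime_value(monthly_revenue=50.0, gross_margin=0.8, lifetime_months=12)
human_led_cac = 600.0    # e.g. salesperson time per closed customer
ai_assisted_cac = 200.0  # same funnel, with AI-drafted personalized outreach

for label, cac in [("Human-led", human_led_cac), ("AI-assisted", ai_assisted_cac)]:
    print(f"{label}: LTV/CAC = {ltv / cac:.2f}, viable: {is_viable(cac, ltv)}")
```

Nothing about the product changes between the two scenarios; only the cost of acquiring each customer does, and that alone flips the business from losing money on every customer to making money on each one.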

Therefore, I look for some level of asymmetry in the benefit of Generative AI.

Generative AI as a mouse replacement?

I already talk to my Amazon Alexa. I am used to the voice interface. So, it seems obvious that LLMs like GPT will make voice interfaces easier and more natural.

There is also an ongoing generational shift in norms. For example, my kids don’t understand why they can’t press on the screen of my laptop. They are so used to touch screens that it doesn’t make sense that you’d use a device without one.

Will the future be one in which natural language communication is so much the norm that any other form will be inconceivable? Or, are there kinds of activities where a keyboard and mouse are just an inherently better fit?

I think this will take some time to resolve because human behavioral norms and habits are slower to change than the enabling technology.

In the meantime, in assessing startup opportunities, I like to have some reason to believe that users will actually prefer a natural language interface over a traditional UX.

The Power of Context

Another key benefit of LLMs is their ability to use and infer context. Again, this is a result of the combination of scale, architecture, and training.

Unlike a search engine, where you have to know what you are searching for, Generative AI can identify relevant content from context, which can be more subtle. Imagine a virtual meeting assistant that doesn’t just transcribe the meeting but also surfaces relevant documents and notes from prior discussions on the same topic, without being asked.

That said, Generative AI presents a new art that must be mastered: “prompt engineering,” i.e. how to structure the prompt given to the model (be it GPT, Midjourney, DALL-E, etc.). This currently requires non-trivial finesse and experimentation, and it is relevant in assessing the startup opportunity too: is the required prompt engineering non-trivial, and therefore harder to replicate?
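As an illustration of what “structuring the prompt” can involve, here is a hypothetical Python sketch that assembles a few-shot prompt from a role, an instruction constraining the output, worked examples, and the new input. The task, wording, labels, and examples are all invented; real prompts typically need repeated iteration against a specific model, which is the finesse described above.

```python
def build_prompt(deck_text: str, examples: list[tuple[str, str]]) -> str:
    """Assemble a few-shot classification prompt (hypothetical structure)."""
    parts = [
        "You are an analyst who classifies startup pitch decks by funding stage.",
        "Answer with exactly one of: Pre-seed, Seed, Series A, Series B+.",
        "",
    ]
    # Worked examples show the model the expected input/output pattern.
    for example_text, label in examples:
        parts += [f"Deck: {example_text}", f"Stage: {label}", ""]
    # The new input, left open for the model to complete.
    parts += [f"Deck: {deck_text}", "Stage:"]
    return "\n".join(parts)


examples = [
    ("Raising $1.5M to build our MVP; two design partners signed.", "Seed"),
    ("$2M ARR growing 3x year over year; raising $12M to scale sales.", "Series A"),
]
prompt = build_prompt("Raising $500K; prototype in testing with ten users.", examples)
print(prompt)
```

Which examples to include, how to phrase the constraint, and how to handle answers outside the allowed labels are exactly the kinds of choices that make a well-tuned prompt harder to copy than it first appears.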

The Power of Scale

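The size of LLMs is staggering. GPT-3 has 175 billion parameters. OpenAI has not disclosed GPT-4’s parameter count; a widely circulated rumor put it at 100 trillion, though OpenAI’s CEO dismissed that figure.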

In what kind of applications is this scale the most valuable? My view is that the low-hanging fruit is in those areas which relate to large amounts of context-dependent textual data.

The first case we have focused on is law, which famously involves huge amounts of text - statutes, case law, evidence, etc. We are building and funding SIID to leverage Generative AI to massively accelerate the e-discovery process by analyzing evidence, summarizing, assessing sentiment, and identifying key sections.

Another area that relies on huge amounts of context-dependent text is medicine. However, here we must be careful, because the cost of errors is very high and potentially fatal.

The Dangerous Downsides

The ability to handle vagueness and ambiguity comes with the possibility of errors. GPT and other LLMs suffer from “hallucinations”; they will simply “make up” answers that are not true.

This is also a product of their architecture and training. OpenAI and others continue to work diligently to address these shortcomings, but they are unlikely to be entirely eliminated any time soon.

In some use cases, the cost of an error is trivial. For example, DeckSend uses GPT-4 to analyze fundraising decks, give feedback to founders, and match them with investors. If DeckSend accidentally mischaracterizes a Seed-round deck as a Series A deck, the cost to the participants is low.

However, I think few people would be comfortable with today’s Generative AI running a nuclear reactor or making healthcare decisions.
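One way to frame this trade-off is expected error cost: volume × error rate × cost per error. The minimal Python sketch below uses invented figures, and a dollar amount is only a crude proxy for harm (especially in healthcare), but it shows why a lower error rate does not rescue a use case where each error is catastrophic.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    tasks_per_month: int
    error_rate: float      # fraction of outputs that are wrong (hallucinations, misclassifications)
    cost_per_error: float  # rough cost of one uncaught error, in dollars

    def expected_error_cost(self) -> float:
        return self.tasks_per_month * self.error_rate * self.cost_per_error


# Hypothetical figures for illustration only.
cases = [
    UseCase("Pitch-deck stage classification", tasks_per_month=1_000, error_rate=0.05, cost_per_error=5),
    UseCase("Medication recommendation", tasks_per_month=1_000, error_rate=0.01, cost_per_error=1_000_000),
]
for c in cases:
    print(f"{c.name}: expected monthly error cost ${c.expected_error_cost():,.0f}")
```

The same framing also allows a comparison against the human baseline: what matters is whether the model’s expected error cost is lower than that of the people or process it replaces, not whether it is zero.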

Evaluation Criteria

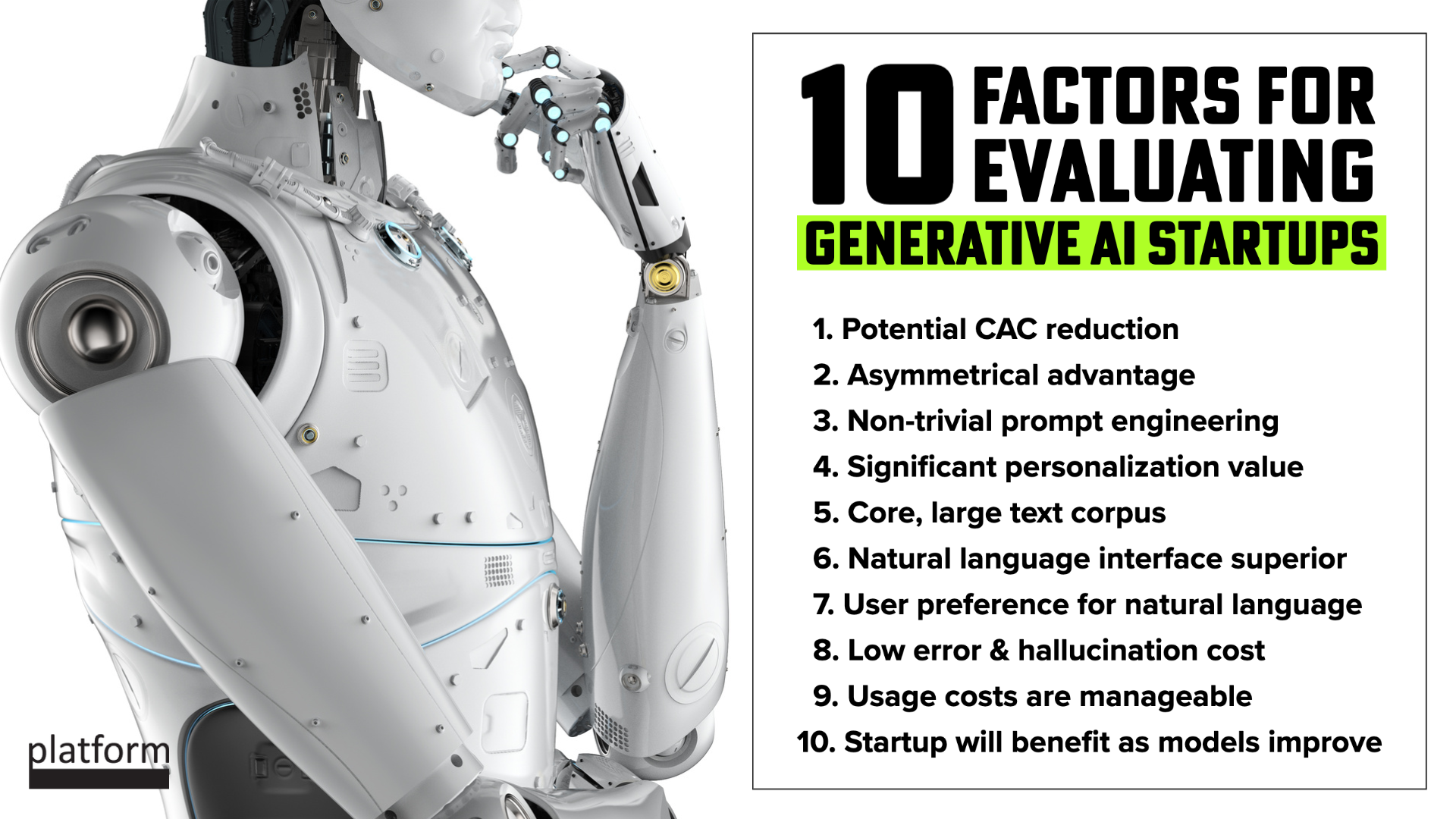

So, what criteria should we use to evaluate a startup leveraging Generative AI today?

Things will change quickly, but here is my list for today (May 2023):

- Generative AI drives significant reduction in CAC.

- Generative AI provides an asymmetric advantage rather than simply fueling a zero-sum arms race between competing AI models.

- The prompt engineering required to make it work is non-trivial/non-obvious.

- The value of Generative AI-driven personalization is significant.

- The interpretation, summarization, and recall of large amounts of written text is a core aspect of the problem to be solved (e.g. legal use cases).

- The utility of a natural language interface - one that can handle ambiguity, context, vagueness, etc - is significantly higher than a traditional structured-data interface.

- There is evidence that users would prefer to interact with an NLP/chat interface rather than a traditional UX.

- The cost of errors and hallucinations is low (by contrast, prescribing someone the wrong medication is not acceptable).

- The costs of using Generative AI are manageable, either today or in the near future.

- The startup will benefit as Generative AI models continue to improve - i.e. the startup will be carried forward by the wave.

Note that these criteria are additive - the more are true, the more likely I am to spend time digging into the opportunity.
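Because the criteria are additive rather than pass/fail, a first-pass screen amounts to counting how many hold. Here is a minimal Python sketch; the criterion names are paraphrased, the startup is hypothetical, and weighting every criterion equally is a simplifying assumption rather than a stated rule.

```python
CRITERIA = [
    "Significant CAC reduction",
    "Asymmetric advantage, not a zero-sum arms race",
    "Non-trivial prompt engineering",
    "Significant personalization value",
    "Large volumes of written text are core to the problem",
    "Natural language interface far more useful than structured UX",
    "Evidence users prefer a chat interface",
    "Low cost of errors and hallucinations",
    "Manageable Generative AI costs",
    "Benefits as models improve",
]


def score(answers: dict[str, bool]) -> int:
    """Count how many criteria hold; criteria left unanswered count as not met."""
    unknown = set(answers) - set(CRITERIA)
    if unknown:
        raise KeyError(f"unknown criteria: {sorted(unknown)}")
    return sum(answers.get(c, False) for c in CRITERIA)


# A hypothetical legal-tech startup.
example = {
    "Significant CAC reduction": True,
    "Large volumes of written text are core to the problem": True,
    "Low cost of errors and hallucinations": True,
    "Benefits as models improve": True,
    "Evidence users prefer a chat interface": False,
}
print(f"{score(example)}/{len(CRITERIA)} criteria met")
```

Rejecting unknown keys guards against a misspelled criterion silently counting as “not met.”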

Consultant @ getmiles.com

If you dig into insurance, you might find that GenAI’s inaccuracies are indeed a big problem; however, it hallucinates less than humans make mistakes, so it could be OK.

It reminds me of the self-driving car debate, where the question is whether to use the technology if it is not good, but still better than the human alternative.

CEO | Founder | Managing Partner @ Platform Venture Studio

Self-driving cars are a poster child because they will likely continue to be worse than humans in some ways but better overall. It’s hard for humans to accept these trade-offs.

As an example, there’s currently a flap here in San Francisco about self-driving vehicles getting stuck and blocking emergency vehicles. Of course, the actual incidence is very low and should be weighed against the injuries and deaths caused by human drivers that self-driving vehicles avoid.

It is the classic trolley problem writ large.